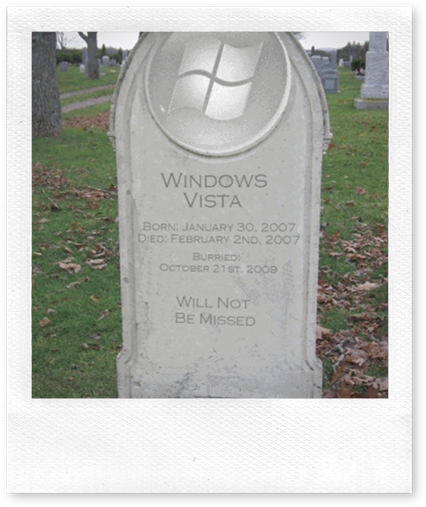

Vista, born into the Windows family in early 2007, was finally laid to rest earlier this week.

Friday, October 23, 2009

Wednesday, October 21, 2009

Running MS Team System tests in NUnit

Earlier this week I saw this question on Stack Overflow asking if it was possible to run MS Team System Tests under NUnit. At first, this sounds like a really odd request. After all, why not just convert your tests to NUnit and be done with it?

I can think of a few scenarios where you may need interoperability – for example, two teams collaborating where team #1 has a corporate policy mandating MSTest, while team #2 doesn’t have access to a version of Visual Studio with Team System. Being able to target one platform but have the tests run in either environment is desirable. Whatever the reason, it’s an interesting code challenge.

Fortunately, the good folks at Exact Magic Software published an NUnit addin that can adapt Team System test fixtures to NUnit. Since NUnit identifies candidate fixtures simply by examining attribute names, the addin’s job is fairly straightforward, but it also recognizes MS-specific features such as TestContext and DataSource-driven tests.
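To make "examining attribute names" concrete, here is a minimal sketch (my own illustration, not Exact Magic's code or NUnit's actual implementation) that finds fixtures and tests by matching attribute *type names* through reflection, without referencing the MSTest assembly. The `FixtureFinder` class and the local stand-in attributes are hypothetical; the stand-ins only borrow the names MSTest uses.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Hypothetical local stand-ins that share their *names* with MSTest's attributes.
[AttributeUsage(AttributeTargets.Class)]
public class TestClassAttribute : Attribute { }

[AttributeUsage(AttributeTargets.Method)]
public class TestMethodAttribute : Attribute { }

[TestClass]
public class SampleMsTestFixture
{
    [TestMethod] public void AddsNumbers() { }
    [TestMethod] public void SubtractsNumbers() { }
    public void NotATest() { }
}

public static class FixtureFinder
{
    // True if the member carries an attribute with the given type name,
    // regardless of which assembly defines that attribute.
    static bool HasAttributeNamed(MemberInfo member, string attributeName)
    {
        return member.GetCustomAttributes(true)
                     .Any(a => a.GetType().Name == attributeName);
    }

    public static IEnumerable<Type> FindFixtures(Assembly assembly)
    {
        return assembly.GetTypes()
                       .Where(t => HasAttributeNamed(t, "TestClassAttribute"));
    }

    public static IEnumerable<string> FindTests(Type fixture)
    {
        return fixture.GetMethods(BindingFlags.Public | BindingFlags.Instance)
                      .Where(m => HasAttributeNamed(m, "TestMethodAttribute"))
                      .Select(m => m.Name);
    }
}
```

Because only the name is compared, the same check works whether the attribute comes from Microsoft's assembly or from a look-alike.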

Unfortunately, the NUnit adapter uses features that reside within the nunit.core assembly, which ties it to a specific version of NUnit. In this case, the addin will only work with NUnit 2.4.6. This is a frustrating design problem that plagues many NUnit addins, including my own open source project.

Note: NUnit 3.0 plans to solve this problem by moving the boundary between framework and test runner so that each version of the framework knows how to run its own tests. The GUI or host application will be able to load and execute the tests in a version-independent manner. Hopefully this means that addins will target and execute within a specific framework version, but will work with different versions of the user interface.

The current version of NUnit (2.5.2) is a stepping stone between the 2.4.x framework and the upcoming, overhauled 3.0 version. As part of this transition, there are a lot of breaking changes from older versions. In practice, this means Exact Magic’s project simply won’t compile if you update its dependencies. Since I need to do similar work for my own project, this was a good exercise.

I’ve reworked their code and put it here.

A few known caveats:

- The unit tests for the adapter use reflection to access internal fields or methods within NUnit.Core that have been renamed or no longer exist. I haven’t bothered fixing these tests.

- The adapter does not appear to support AssemblyInitialize or AssemblyCleanup, though these could be adapted by adding an EventListener that handles the start and finish of the test run. I may add this feature.

- The adapter doesn’t wrap functionality contained within MS Test; it simulates its behavior. While the result is the same, it won’t capture all the nuances of Microsoft’s implementation.

If you have any concerns or questions, let me know.

Cheers.

by bryan at 12:58 AM


Tuesday, October 20, 2009

My current approach to Context/Specification

One of my most popular posts from last year is an article on test naming guidelines, which was written to resemble the format used by the Framework Design Guidelines. Despite the popularity of the article, I started to stray from those guidelines over the last year. While I haven’t abandoned the philosophy behind those guidelines (in fact, most of the general advice still applies), I’ve begun to adopt a much different approach for structuring and organizing my tests, and as a result, the naming has changed slightly too.

The syntax I’ve promoted and followed for years, let’s call it TDD or original-flavor, has a few known side-effects:

- Unnecessary or complex Setup – You declare common setup logic in the Setup of your tests, but not all tests require this initialization logic. In some cases, a test requires entirely different setup logic, so the initial “arrange” portion of the test must undo some of the work done in the setup.

- Grouping related tests – When a complex component has a lot of tests that handle different scenarios, keeping dozens of tests organized can be difficult. Naming conventions can help here, but they only mask the underlying problem.

Over the last year, I’ve been experimenting with a Behavior-Driven Development (BDD) flavor of tests, often referred to as the Context/Specification pattern. While it addresses the side-effects outlined above, the goal of “true” BDD is to describe requirements in a common language shared by the technical and non-technical members of an Agile project, often with a Given / When / Should syntax. For example,

Given a bank account in good standing, when the customer requests cash, the bank account should be debited

The underlying concept is that when tests are written using this syntax, they become executable specifications – and that’s really cool. Unfortunately, I’ve always considered this syntax to be somewhat awkward, so my adoption of this approach is a rather loose interpretation. I’m also still experimenting, so your feedback is definitely welcome.

An Example

Rather than try to explain the concepts and the coding style in abstract terms, I think it’s best to let the code speak for itself first and then try to reason my way out.

Note: I’ve borrowed and bent concepts from many different sources, some of which I can’t recall. This example borrows many concepts from Scott Bellware’s specunit-net.

```csharp
using System.Collections.Generic;
using NUnit.Framework;

public abstract class ContextSpecification
{
    [TestFixtureSetUp]
    public void SetupFixture()
    {
        BeforeAllSpecs();
    }

    [TestFixtureTearDown]
    public void TearDownFixture()
    {
        AfterAllSpecs();
    }

    [SetUp]
    public void Setup()
    {
        Context();
        Because();
    }

    [TearDown]
    public void TearDown()
    {
        CleanUp();
    }

    protected virtual void BeforeAllSpecs() { }
    protected virtual void Context() { }
    protected virtual void Because() { }
    protected virtual void CleanUp() { }
    protected virtual void AfterAllSpecs() { }
}

// ArgumentBuilder and the ShouldBeEmpty/ShouldContain extension methods are not shown.
public class ArgumentBuilderSpecs : ContextSpecification
{
    protected ArgumentBuilder builder;
    protected Dictionary<string, string> settings;
    protected string results;

    protected override void Context()
    {
        builder = new ArgumentBuilder();
        settings = new Dictionary<string, string>();
    }

    protected override void Because()
    {
        results = builder.Parse(settings);
    }

    [TestFixture]
    public class WhenNoArgumentsAreSupplied : ArgumentBuilderSpecs
    {
        [Test]
        public void ResultsShouldBeEmpty()
        {
            results.ShouldBeEmpty();
        }
    }

    [TestFixture]
    public class WhenProxyServerSettingsAreSupplied : ArgumentBuilderSpecs
    {
        protected override void Because()
        {
            settings.Add("server", "proxyServer");
            settings.Add("port", "8080");
            base.Because();
        }

        [Test]
        public void ShouldContainProxyServerArgument()
        {
            results.ShouldContain("-DhttpProxy:proxyServer");
        }

        [Test]
        public void ShouldContainProxyPortArgument()
        {
            results.ShouldContain("-DhttpPort:8080");
        }
    }
}
```

Compared to my original flavor, this new bouquet has some considerable differences which may seem odd to an accustomed palate. Let’s walk through those differences:

- No longer using Fixture-per-Class structure, where all tests for a class reside within a single class.

- Top level “specification” ArgumentBuilderSpecs is not decorated with a [TestFixture] attribute, nor does it contain any tests.

- ArgumentBuilderSpecs derives from a base class ContextSpecification which controls the setup/teardown logic and the semantic structure of the BDD syntax.

- ArgumentBuilderSpecs contains the variables that are common to all tests, but setup logic is kept to a minimum.

- ArgumentBuilderSpecs contains two nested classes that derive from ArgumentBuilderSpecs. Each nested class is a test-fixture for a scenario or context.

- Each Test-Fixture focuses on a single action only and is responsible for its own setup.

- Each Test represents a single specification, often expressed as a single Assert.

- Asserts are made using extension methods (not depicted in the code example).
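Since the extension methods aren't shown, here is a minimal sketch of what `ShouldBeEmpty` and `ShouldContain` might look like for strings. It assumes they simply throw when the expectation isn't met; in an NUnit project you would more likely delegate to `Assert`. The `SpecificationException` type is hypothetical.

```csharp
using System;

// Hypothetical failure type; an NUnit-based version would call Assert instead.
public class SpecificationException : Exception
{
    public SpecificationException(string message) : base(message) { }
}

public static class StringSpecificationExtensions
{
    // Passes when the string is null or has no characters.
    public static void ShouldBeEmpty(this string actual)
    {
        if (!string.IsNullOrEmpty(actual))
            throw new SpecificationException(
                string.Format("Expected an empty string but was \"{0}\".", actual));
    }

    // Passes when the expected text appears somewhere in the actual string (ordinal match).
    public static void ShouldContain(this string actual, string expected)
    {
        if (actual == null || actual.IndexOf(expected, StringComparison.Ordinal) < 0)
            throw new SpecificationException(
                string.Format("Expected \"{0}\" to contain \"{1}\".", actual, expected));
    }
}
```

Reading `results.ShouldContain("-DhttpPort:8080")` as a sentence is the main payoff; the failure message is where the context class name and the spec name come together in the test runner output.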

Observations

Inheritance

I’ve never been a big fan of using inheritance, especially in test scenarios, as it requires more effort on the part of future developers to understand the test structure. In this example, inheritance plays a part in both the base class and the nested classes, though you could argue the impact of inheritance is negated since the base class only provides structure, and the derived classes are clearly visible within the parent class. It’s a bit unwieldy, but the payoff for using inheritance is found when viewing the tests in their hierarchy.

Technically, you could achieve a similar effect by using namespaces to group tests, but you would lose some of the benefits of encapsulating test-helper methods and common variables.

Although we can extend our contexts by deriving from the parent class, this approach is limited to inheriting from the root specification container (ArgumentBuilderSpecs). If you were to derive from WhenProxyServerSettingsAreSupplied, for example, you would inherit the test cases from that class as well. I have yet to find a scenario where I needed to do this. While the concepts of DRY make sense, there’s a lot to be said for clear intent in test cases, where duplication aids readability.

Extra Plumbing

There’s quite a bit of extra plumbing needed to create our nested contexts, and it seems to take a bit longer to write tests. This delay is caused either by grappling with new context/specification concepts, by writing additional code for subclasses, or by spending more thought determining which contexts are required. I’m anticipating that it gets easier with more practice, and some Visual Studio code snippets might simplify the authoring process.

One area where I can sense I’m slowing down is trying to determine if I should be overriding or extending Context versus Because.
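One rule of thumb that may help here, offered as a suggestion rather than a settled convention: put anything that *arranges* the scenario in Context (extending it by calling `base.Context()` first), and keep Because as the single action under test. The sketch below replays the ContextSpecification lifecycle without NUnit so the call order is visible. `SpecLifecycle`, `ListBuilderSpecs`, and `WhenItemsAreSupplied` are hypothetical names, and `string.Join` stands in for the ArgumentBuilder.

```csharp
using System.Collections.Generic;

// Framework-free stand-in for ContextSpecification: Setup() runs Context, then Because,
// mirroring what the [SetUp] method does in the NUnit version.
public abstract class SpecLifecycle
{
    public void Setup()
    {
        Context();
        Because();
    }

    protected virtual void Context() { }
    protected virtual void Because() { }
}

public class ListBuilderSpecs : SpecLifecycle
{
    public readonly List<string> CallOrder = new List<string>();
    protected List<string> settings;
    public string Results { get; private set; }

    protected override void Context()
    {
        CallOrder.Add("ListBuilderSpecs.Context");
        settings = new List<string>();
    }

    protected override void Because()
    {
        CallOrder.Add("ListBuilderSpecs.Because");
        Results = string.Join(" ", settings);   // the single action under test
    }
}

// Extends Context (arrange) rather than Because, so Because stays a single action.
public class WhenItemsAreSupplied : ListBuilderSpecs
{
    protected override void Context()
    {
        base.Context();                          // shared arrangement runs first
        CallOrder.Add("WhenItemsAreSupplied.Context");
        settings.Add("-DhttpProxy:proxyServer");
        settings.Add("-DhttpPort:8080");
    }
}
```

The trade-off is that each derived context must remember to call `base.Context()` before touching the shared fields; overriding Because instead, as in the example above, works too but mixes arrangement with the action.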

Granular Tests

In this style of tests, where a class is created to represent a context, each context performs only one small piece of work and the tests serve as assertions against the outcome. I found that the tests I wrote were concise and elegant, and the use of classes to structure the tests around the context helped to organize both the tests and the responsibility of the subject under test. I also found that I wrote fewer tests with this approach – I write only a few principal contexts. If a class starts to have too many contexts, it could mean that the class has too much responsibility and should be split into smaller parts.

Regarding the structure of the tests, if you’re used to the fixture-per-class style, it may take some time to get accustomed to each context performing only a single action. However, as Steven Harman points out, visualizing the context setup as part of the fixture setup may help guide your transition to this style of testing.

Conclusion

I’m enjoying writing Context/Specification style tests as they read like a specification document and provide clear feedback of what the system does. In addition, when a test fails, the context it belongs to provides additional meaning around the failure.

There appear to be many different formats for writing tests in this style, and my current format is a work in progress. Let me know what you think.

Presently, I’m flip-flopping between this format and my old habits. I’ve caught myself a few times writing a flat structure of tests without taking context into consideration – after a point, the number of tests becomes unmanageable, and it becomes difficult to identify whether I should be tweaking existing tests or writing new ones. If the tests are organized by context, the number of tests becomes irrelevant, and the focus is placed on the scenarios being tested.

by bryan at 9:16 AM

1 comments

![]()

Monday, October 19, 2009

Configuring the Selenium Toolkit for Different Environments

Suppose you’ve written some Selenium tests using Selenium IDE that target your local machine (http://localhost), but you’d like to repurpose the same tests for your QA, Staging or Production environment as part of your build process. The Selenium Toolkit for .NET makes it really easy to change the target environment without having to touch your tests.

In this post, I’ll show you how to configure the Selenium Toolkit for the following scenarios:

- Local Selenium RC / Local Web Server

- Local Selenium RC / Remote Web Server

- Remote Selenium RC / Remote Web Server

Running Locally

In this scenario, both the Selenium Remote Control Host (aka Selenium RC / Selenium Server) and the web site you want to test are running on your local machine. This is perhaps the most common scenario for developers who develop against a local environment, though it also applies to your build server if the web server you want to test is also on that machine.

Although this configuration is limited to running tests against the current operating system and installed browsers, it provides a few advantages to developers as they can watch the tests execute or set breakpoints on the server to assist in debugging.

This is the default configuration for the Selenium Toolkit for .NET – when the tests execute, the NUnit Addin will automatically start the Selenium RC process and shut it down when the tests complete.

Assuming you have installed the Selenium Toolkit for .NET, the only configuration setting you'll need to provide is the URL of the web site you want to test. In this example, we assume that http://mywebsite is running on the local web server.

```xml
<Selenium BrowserUrl="http://mywebsite" />
```

Running Tests against a Remote Web Server

In this scenario, Selenium RC runs locally, but the web server is on a remote machine. You’ll still see the browser executing the tests locally, but because the web server is physically located elsewhere, it’s not as easy to debug server-side issues. From a configuration perspective, this scenario uses the same configuration settings as above, except that the URL of the server is not local.

This scenario is typically used in a build server environment. For example, the build server compiles and deploys the web application to a target machine using rsync and then uses Selenium to validate the deployment using a few functional tests.

Executing Tests in a Remote Environment / Selenium Grid

In this scenario, your local test engine executes your tests against a remote Selenium RC process. While this could be a server where the Selenium RC process is configured to run as a dedicated service, it’s more likely that you would use this configuration to execute your tests against a Selenium Grid. The Selenium Grid server exposes the same API as Selenium RC, but it acts as a broker between your tests and multiple operating systems and browser configurations.

To configure the Selenium Toolkit to use a Selenium Grid, you’ll need to specify the location of the Grid Server and turn off the automatic start/stop feature:

```xml
<Selenium
    server="grid-server-name"
    port="4444"
    BrowserUrl="http://mywebsite.com">
  <runtime autoStart="false" />
</Selenium>
```

by bryan at 8:45 AM


Thursday, October 15, 2009

A Proposal for Functional Testing

When I’m writing code, my preference is to follow test-driven development techniques where I’m writing tests as I go. Ideally, each test fixture focuses attention on one object at a time, isolating its behavior from its dependencies.

While unit tests provide us with immediate feedback about our progress, it would be foolish to deploy a system without performing some form of integration testing to ensure that the system’s components work as expected when pieced together. Often, integration tests focus on a cohesive set of objects in a controlled environment, such as restoring a database after the test.

Eventually, you’ll need to bring all the components together and test them in real-world scenarios. The best place to bring all these components together is the live system or staged equivalent. The best tool for the job is a human inspecting the system. Period.

Wait, I thought this was supposed to be about functional testing? Don’t worry, it is.

Humans may be the best tool for the job, but if you consider the effort associated with code freezes, build reports, packaging and deployment, verification, and coordination with the client and waiting testing teams, using humans for testing can be really expensive. This is especially true if you deliver a failed build to your testing team -- testers who’ve been queued up are now unable to test and must wait for the next build. If you were to total up the hours across the entire team, you’d be losing at least a day in scheduled cost.

Functional tests can help prevent this loss in production.

In addition, humans possess an understanding of what the system should do, as well as what previous versions did. Humans are users of the system and can contribute greatly to the overall quality of the product. However, once a human has tested and validated a feature, revisiting these tests in subsequent builds becomes more of a check than a test. Going back to check these features becomes increasingly difficult on short timelines as the complexity of the system grows. Invariably, shortcuts are taken, features are missed, and subtle, aggravating bugs silently sneak into the system. While separation of concerns and good unit tests can downplay the need for full regression tests, the value of system-wide integration tests for repetitive tasks shouldn’t be discounted.

Functional tests can help here too, but these benefits are largely the fruits of the labor described in my first point about validating builds. Most organizations can’t capitalize on this simply because they don’t have the base to build up from.

Functional tests take a lot of criticism, however. Let’s address some common (mis)beliefs.

Duplication of testing efforts / Diminishing returns. Where teams have invested in test driven development, tests tend to focus on the backend code artifacts as these parts are the core logic of the application. Using mocks and stubs, the core logic can be tested extremely well from the database layer and up, but as unit-tests cross the boundary from controller-logic into the user-interface layer, testing becomes harder to simulate: web-applications need to concern themselves with server requests; desktop applications have to worry about things like screen resolution, user input and modal dialogs. In such team environments, testing the user-interface isn’t an attractive option since most bugs, if any, originate from the core logic that can be covered by more unit tests. From this perspective, adding functional tests wouldn’t provide enough insight to outweigh the effort involved.

I’d agree with this perspective if the functional tests were trying to aggressively interrogate the system at the same level of detail as their backend equivalents. Unlike unit tests, functional tests are focused on emulating what the user does and sees, not on the technical aspects under the hood. They operate in the live system, providing a comprehensive view of the system that unit tests cannot. In the majority of cases, a few simple tests that follow the happy path of the application may be all you need to validate the build.

Moreover, failures at this level point to problems in the build, packaging or deployment – something well beyond a typical unit test’s reach.

Functional tests are too much effort / No time for tests. This is a common view that is applied to testing in general, which is based on a flawed assumption that testing should follow after development work is done. In this argument, testing is seen as “double the effort”, which is an unfair position if you think about it. If you treat testing as a separate task and wait until the components are fully written, then without a doubt the action of writing tests becomes an exercise in reverse-engineering and will always be more effort.

Functional tests, like unit tests, should be brought into the development process. While there is some investment required to get your user-interface and its components into a test-harness, the effort to add new components and tests (should) become an incremental task.

Functional tests are too brittle / Too much maintenance. Without doubt, the user-interface can be the most volatile part of your application, as it is subject to frequent cosmetic changes. If you’re writing tests that depend on the contract of the user-interface, it shouldn’t be a surprise that they’re going to be impacted when that interface changes. Claiming that your tests are the source of extra effort because of changes you introduced is an indication of a problem in your approach to testing.

Rather than reacting to changes, anticipate them: if you have a change to make, use the tests to introduce that change. There are many techniques to accomplish this (and I may have to blog about that later), but here’s an example: to identify tests that are impacted by your change, try removing the part that needs to change and watch which tests fail. Find a test that resembles your new requirement and augment it to reflect the new requirements. The tests will fail at first, but as you add the new requirements, they’ll slowly turn green.

As an added bonus, when you debug your code through the automated test, you won’t have to endure repetitive user-actions and keystrokes. (Who has time for all the clicking??)

This approach works well in agile environments where stories are focused on adding or changing features for an interaction and changes to the user-interface are expected.

Adding Functional Testing to your Regime

Develop a test harness

The first step to adding functional testing to your project is the development of a test harness that can launch the application and get it into a ready state for your tests. Depending on the complexity of your application and how far you want to take your functional tests, this can seem like the largest part of the work. Fortunately, most test automation products provide a “recorder” application that can generate code from user activity, which can jump-start this process. While these tools make it easy to get started, they are really only suitable for basic scenarios or for initial prototyping. As your system evolves, you’ll quickly find that the duplication in these scripts becomes a maintenance nightmare.

To avoid this issue, you’ll want to model the screens and functional behavior of your application as modular components that hide the implementation details of the recorder tool’s output. This approach shields you from having to re-record your tests and makes it easier to apply changes to tests. The downside to this approach is that it may take some deep thinking on how to model your application, and it will seem as though you’re writing a lot of code to emulate what your backend code already does. However, once this initial framework is in place, it becomes easier to add new components. Eventually, you reach a happy place where you can write new tests without having to record anything.

The following example illustrates how the implementation details of a product editor are hidden from the test, but the user actions are clearly visible:

```csharp
[Test]
public void CanOpenAnExistingProduct()
{
    using (var app = new App())
    {
        app.Login("user1", "p@ssw3rd");

        var product = new Product()
        {
            Id = 1,
            Name = "Foo"
        };

        // opens the product editor,
        // fills it with my values,
        // saves it, closes it.
        app.CreateNewProduct(product);

        // open the dialog, find the item
        ProductEditorComponent editor = app.OpenProductEditor("Foo");

        // retrieves the settings of the product from the screen
        Product actual = editor.GetEntity();

        Assert.AreEqual(product, actual);
    }
}
```
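Two details are implied by that test: `Assert.AreEqual(product, actual)` only passes if Product overrides Equals, and ProductEditorComponent exists to hide how the screen is driven. The sketch below shows one possible shape for both, assuming a hypothetical `IScreenDriver` abstraction over whatever automation tool you use. `IScreenDriver`, `FakeScreenDriver`, `SetEntity`, and the automation ids are invented for illustration; only `GetEntity` appears in the test above.

```csharp
using System.Collections.Generic;

// Value equality so that Assert.AreEqual(expected, actual) compares contents, not references.
public class Product
{
    public int Id { get; set; }
    public string Name { get; set; }

    public override bool Equals(object obj)
    {
        var other = obj as Product;
        return other != null && Id == other.Id && Name == other.Name;
    }

    public override int GetHashCode()
    {
        return Id.GetHashCode() ^ (Name ?? string.Empty).GetHashCode();
    }
}

// Hypothetical seam between the tests and the automation tool.
public interface IScreenDriver
{
    void SetText(string automationId, string value);
    string GetText(string automationId);
}

// The component knows the automation ids; tests only see user-level actions.
public class ProductEditorComponent
{
    private readonly IScreenDriver driver;

    public ProductEditorComponent(IScreenDriver driver)
    {
        this.driver = driver;
    }

    public void SetEntity(Product product)
    {
        driver.SetText("ProductId", product.Id.ToString());
        driver.SetText("ProductName", product.Name);
    }

    public Product GetEntity()
    {
        return new Product
        {
            Id = int.Parse(driver.GetText("ProductId")),
            Name = driver.GetText("ProductName")
        };
    }
}

// In-memory driver so the component can be exercised without a real UI.
public class FakeScreenDriver : IScreenDriver
{
    private readonly Dictionary<string, string> fields = new Dictionary<string, string>();

    public void SetText(string automationId, string value) { fields[automationId] = value; }
    public string GetText(string automationId) { return fields[automationId]; }
}
```

If a control's automation id changes, only the component needs updating; the tests that call `GetEntity()` stay the same.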

Write Functional Unit Tests for Screen Components

Once you’ve got a basic test-harness, you should consider developing simple functional tests for user-interface components as you add them to your application. If you can demo it to the client, you're probably ready to start writing functional tests. A few notes to consider at this stage:

- Be pragmatic! Screen components that are required as part of base use cases will have more functional tests than non-essential components.

- Consider pairing developers with testers. As the developer builds the UI, the tester writes the automation tests that verify the UI’s functionality. Testers may guide the development of the UI to include automation ids, which reduces the amount of reverse-engineering.

- Write tests as new features or changes are introduced. No need to get too granular; just verify the essentials.

Verify Build Process with Functional Sanity Tests

While your functional unit tests concentrate on the behaviors of individual screen components, you’ll want to augment your build process with tests that demonstrate common user-stories that can be used to validate the build. These tests mimic the minimum happy path.

If you’re already using a continuous integration server to run unit tests as part of each build, functional tests can be included at this stage, or they can be relegated to nightly builds or to the release process for your quality assurance team.

Augment QA Process

As noted above, humans are a critical part of the testing of our applications and that’s not likely to change. However, the framework that we used to validate the build can be reused by your testing team to write automation tests for their test cases. Ideally, humans verify the stories manually, then write automation tests to represent regression tests.

Tests that require repetitive or complex time consuming procedures are ideal candidates for automation.

Conclusion

Automated functional testing can add value to your project for build verification and regression testing. Being pragmatic about the components you automate and vigilant in your development process to ensure the tests remain in sync are the keys to their success.

How does your organization use functional testing? Where does it work? What’s your story?

by bryan at 8:21 AM


Wednesday, October 07, 2009

Use Windows 7 Libraries to organize your code

One of the new features that I’m really enjoying in Windows 7 is the ability to group common folders from different locations into a common organizational unit, known as a Library. I work with a lot of different code bases and tend to generate a lot of mini-prototypes, and I’ve struggled with a good way to organize them. The Library feature in Windows 7 offers a neat way to view and organize your files. Here’s how I’ve organized mine.

Create your Library

- Open Windows Explorer

- Bring up the context-menu on the Libraries root folder and choose “New –> Library”

- Select folders to include in your library.

While your folders can be located anywhere, I’ve created a logical folder “C:\Projects” with four sub-folders:

- C:\Projects\Infusion (my employer)

- C:\Projects\lib (group of common libraries I reference a lot)

- C:\Projects\Experiments (small proof of concept projects)

- C:\Projects\Personal (my pet projects)

Note that you can easily add new folders to your library by clicking on the Includes: x locations hyperlink. This dialog also lets you move folders up and down, which makes it easy to organize the folders based on your preference.

Here’s a screen capture of my library, arranged by folder with a List view.

By default, the included folders are arranged by “Folder”, and behind the scenes they’re grouped by the “Folder Path” of the included folders, which gives us the headings above our included folders. A word of caution: while you can change the “Arrange by” and “View” settings without issue, if you change the Group-by setting (View -> Group by), there doesn’t appear to be an easy way to revert it to the default. If you’re heading back, you’ll have to manually add the “Folder Path” column and then set it as the group-by value, but the user-defined sort order of the Library won’t be used.

This view is available anywhere that uses a standard COM dialog. From within Visual Studio, it’s really helpful when adding project references, opening files and creating projects. Being able to search all of your code using the search box in the top-right is awesome.

Add your Library to the Start Menu

There are a few hacks to put your library onto the start menu. You can pin the item to the start menu, which puts it on the left side of the start menu; this technique requires a registry hack to allow Libraries to be pinned.

If you want to put your library into the right-hand side of the start menu, there is no native support for adding custom folders. However, you can repurpose some of the existing folders. I’ve added my library by repurposing the “Recorded TV” library, since my work PC doesn’t have any recorded TV.

Here’s how:

- Open the Control Panel

- Choose “Appearance and Personalization”

- Choose “Customize the Start Menu” under Taskbar and Start Menu.

- Turn on the “Recorded TV” option as “Display as a menu”

- Next, on the Start Menu, right-click “Recorded TV”, remove the default folder locations and then add your own.

- Rename the “Recorded TV” to whatever you want.

Here’s a screen capture of my start menu.

by bryan at 8:25 AM


Monday, October 05, 2009

Find differences between images C#

Suppose you had two nearly identical images and you wanted to locate and highlight the differences between them. Here’s a fun snippet of C# code to do just that.

While the Bitmap class includes methods for manipulating individual pixels (GetPixel and SetPixel), they aren’t as efficient as manipulating the data directly. Fortunately, we can access the low-level bitmap data using the BitmapData class, like so:

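```csharp
using System.Drawing;
using System.Drawing.Imaging;

Bitmap image = new Bitmap("image1.jpg");
Rectangle rect = new Rectangle(0, 0, image.Width, image.Height);
BitmapData data = image.LockBits(rect, ImageLockMode.ReadOnly, PixelFormat.Format24bppRgb);

// ... read the raw pixel data through data.Scan0 and data.Stride ...

image.UnlockBits(data);   // always release the locked bits when finished
```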

Since we’re manipulating memory directly using pointers, we have to mark the method that locks and unlocks the bits with the unsafe keyword and compile with the /unsafe option. Pointer types are not CLS compliant, but this is only a problem if they appear in a public API that you want to share between C# and other .NET languages. If you want to maintain CLS-compliant code, keep any members that take or return pointers private.

To find the differences between two images, we'll loop through and compare the low-level bytes of the images. Where the pixels match, we'll swap the pixel with a pre-defined colour and then later treat this colour as transparent, much like the green-screen technique used in movies. The end result is an image containing only the differences, which can be transparently overlaid on top.

```csharp
using System.Drawing;
using System.Drawing.Imaging;

public class ImageTool
{
    public static unsafe Bitmap GetDifferenceImage(Bitmap image1, Bitmap image2, Color matchColor)
    {
        if (image1 == null || image2 == null)
            return null;

        if (image1.Height != image2.Height || image1.Width != image2.Width)
            return null;

        Bitmap diffImage = (Bitmap)image2.Clone();

        int height = image1.Height;
        int width = image1.Width;
        Rectangle rect = new Rectangle(0, 0, width, height);

        BitmapData data1 = image1.LockBits(rect, ImageLockMode.ReadOnly, PixelFormat.Format24bppRgb);
        BitmapData data2 = image2.LockBits(rect, ImageLockMode.ReadOnly, PixelFormat.Format24bppRgb);
        BitmapData diffData = diffImage.LockBits(rect, ImageLockMode.WriteOnly, PixelFormat.Format24bppRgb);

        try
        {
            byte* data1Ptr = (byte*)data1.Scan0;
            byte* data2Ptr = (byte*)data2.Scan0;
            byte* diffPtr = (byte*)diffData.Scan0;

            // 24bpp pixels are stored in memory as B, G, R
            byte[] swapColor = { matchColor.B, matchColor.G, matchColor.R };
            byte[] tmp = new byte[3];

            // rows are padded to a multiple of 4 bytes; all three buffers share
            // the same width and format, so they share the same padding
            int rowPadding = data1.Stride - (width * 3);

            for (int i = 0; i < height; i++)        // iterate over rows
            {
                for (int j = 0; j < width; j++)     // iterate over columns
                {
                    int same = 0;

                    // compare pixels and copy image2's values into the temporary array
                    for (int x = 0; x < 3; x++)
                    {
                        tmp[x] = data2Ptr[0];
                        if (data1Ptr[0] == data2Ptr[0])
                            same++;

                        data1Ptr++; // advance image1 ptr
                        data2Ptr++; // advance image2 ptr
                    }

                    // matching pixels get the swap colour; others keep image2's values
                    for (int x = 0; x < 3; x++)
                    {
                        diffPtr[0] = (same == 3) ? swapColor[x] : tmp[x];
                        diffPtr++; // advance diff image ptr
                    }
                }

                // at the end of each row, skip the extra padding
                data1Ptr += rowPadding;
                data2Ptr += rowPadding;
                diffPtr += rowPadding;
            }
        }
        finally
        {
            image1.UnlockBits(data1);
            image2.UnlockBits(data2);
            diffImage.UnlockBits(diffData);
        }

        return diffImage;
    }
}
```

An example that finds the difference between the images and then converts the matching colour to transparent.

```csharp
using System.Drawing;
using System.Drawing.Imaging;

class Program
{
    public static void Main()
    {
        // draw two rectangles on a blank 400x400 image
        Bitmap image1 = new Bitmap(400, 400);
        using (Graphics g = Graphics.FromImage(image1))
        {
            g.DrawRectangle(Pens.Blue, new Rectangle(0, 0, 50, 50));
            g.DrawRectangle(Pens.Red, new Rectangle(40, 40, 100, 100));
        }
        image1.Save("C:\\test-1.png", ImageFormat.Png);

        // copy the first image and draw one extra rectangle on the copy
        Bitmap image2 = (Bitmap)image1.Clone();
        using (Graphics g = Graphics.FromImage(image2))
        {
            g.DrawRectangle(Pens.Purple, new Rectangle(0, 0, 40, 40));
        }
        image2.Save("C:\\test-2.png", ImageFormat.Png);

        // matching pixels become pink, which is then made transparent
        Bitmap diff = ImageTool.GetDifferenceImage(image1, image2, Color.Pink);
        diff.MakeTransparent(Color.Pink);
        diff.Save("C:\\test-diff.png", ImageFormat.Png);
    }
}
```
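If you’d rather avoid unsafe code entirely, the same comparison can be done on managed byte arrays, for example after copying `Stride * height` bytes from each locked `Scan0` into an array with `Marshal.Copy`. The sketch below is an illustration under assumptions, not the original code: `ManagedDiff`, `Stride24` and `DiffBuffers` are hypothetical names, and the buffers are assumed to use the same blue, green, red layout and stride as the 24bpp data above. It also shows why `rowPadding` exists in `GetDifferenceImage`: GDI+ typically pads each scan line to a multiple of 4 bytes, so the stride can be larger than `width * 3`.

```csharp
using System;

public static class ManagedDiff
{
    // Stride of a 24bpp scan line rounded up to a 4-byte boundary,
    // the padding GDI+ typically applies to bitmap rows.
    public static int Stride24(int width)
    {
        return ((width * 3) + 3) / 4 * 4;
    }

    // Compares two BGR buffers pixel by pixel. Matching pixels become the key
    // colour (keyB, keyG, keyR); differing pixels keep buf2's values.
    // Padding bytes at the end of each row are left as zero.
    public static byte[] DiffBuffers(byte[] buf1, byte[] buf2, int width, int height,
                                     int stride, byte keyB, byte keyG, byte keyR)
    {
        if (buf1.Length != buf2.Length || buf1.Length < stride * height)
            throw new ArgumentException("Buffers must be the same size and hold stride * height bytes.");

        byte[] diff = new byte[buf1.Length];
        for (int row = 0; row < height; row++)
        {
            int rowStart = row * stride; // jumping by stride skips the previous row's padding
            for (int col = 0; col < width; col++)
            {
                int i = rowStart + col * 3;
                bool same = buf1[i] == buf2[i]
                         && buf1[i + 1] == buf2[i + 1]
                         && buf1[i + 2] == buf2[i + 2];

                diff[i] = same ? keyB : buf2[i];
                diff[i + 1] = same ? keyG : buf2[i + 1];
                diff[i + 2] = same ? keyR : buf2[i + 2];
            }
        }
        return diff;
    }
}
```

Indexing each row from `row * stride` rather than advancing pointers makes the padding explicit, at the cost of some bounds checking that the pointer version avoids.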

by bryan at 8:30 AM


Thursday, October 01, 2009

Reading Oracle Spatial data into .NET

Recently, I was tasked with pulling geo-spatial data from Oracle into an application. While Microsoft provides support for SQL Server 2008’s SqlGeometry and SqlGeography data types in the Microsoft.SqlServer.Types namespace, there is no equivalent for Oracle’s SDO_Geometry data type. We’ll need some custom code to pull this information out of Oracle.

What is Geospatial Data?

Both SQL Server and Oracle provide native data types, SqlGeometry and SDO_Geometry respectively, to represent spatial data. These special fields can describe a single point, or a collection of points forming a line, multi-line, polygon, etc. The database indexes this data to create a “spatial index” and provides native SQL functions to query for intersecting and nearby points. The result is an extremely powerful solution that serves as the basis for next-generation location-aware technologies.

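Oracle stores spatial data in SDO_GEOMETRY, a structured object type whose attributes hold the geometry type, the spatial reference ID, an optional point and two arrays (element info and ordinates). To read it, we’ll need to map that structure into something we can use in .NET.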

User Defined types with Oracle Data Provider for .NET

Fortunately, reading it is really quite easy, as the Oracle Data Provider for .NET supports a plug-in architecture that lets you define your own .NET user-defined types. I found a great starter example in the Oracle forums that included user-defined types for SDO_Geometry and SDO_Point. A near-identical example can be found as part of the Topology Framework .NET, which cites Dennis Jonio as the author.

In summary, the classes look like this:

```csharp
using Oracle.DataAccess.Types;

// OracleCustomTypeBase<T> is the helper base class from the forum sample;
// it is not part of ODP.NET itself.
[OracleCustomTypeMappingAttribute("MDSYS.SDO_POINT_TYPE")]
public class SdoPoint : OracleCustomTypeBase<SdoPoint>
{
    public decimal? X { get; set; }
    public decimal? Y { get; set; }
    public decimal? Z { get; set; }

    /* ... details omitted for clarity */
}

[OracleCustomTypeMappingAttribute("MDSYS.SDO_GEOMETRY")]
public class SdoGeometry : OracleCustomTypeBase<SdoGeometry>
{
    public decimal? Sdo_Gtype { get; set; }
    public decimal? Sdo_Srid { get; set; }
    public SdoPoint Point { get; set; }
    public decimal[] ElemArray { get; set; }
    public decimal[] OrdinatesArray { get; set; }

    /* ... details omitted for clarity */
}
```

With these custom user-defined types, spatial data can be read from the database just like any other data type.

```csharp
using NUnit.Framework;
using Oracle.DataAccess.Client;

[Test]
public void CanFetchSdoGeometry()
{
    string oracleConnection =
        "Data Source=" +
            "(DESCRIPTION=" +
                "(ADDRESS_LIST=" +
                    "(ADDRESS=" +
                        "(PROTOCOL=TCP)" +
                        "(HOST=SERVER1)" +
                        "(PORT=1600)" +
                    ")" +
                ")" +
                "(CONNECT_DATA=" +
                    "(SERVER=DEDICATED)" +
                    "(SERVICE_NAME=INSTANCE1)" +
                ")" +
            ");" +
        "User Id=username;" +
        "Password=password;";

    using (OracleConnection cnn = new OracleConnection(oracleConnection))
    {
        cnn.Open();

        // assumes the second column (index 1) of TABLE1 holds SDO_GEOMETRY values
        string sql = "SELECT * FROM TABLE1";
        using (OracleCommand cmd = new OracleCommand(sql, cnn))
        {
            cmd.CommandType = System.Data.CommandType.Text;

            using (OracleDataReader reader = cmd.ExecuteReader())
            {
                while (reader.Read())
                {
                    if (!reader.IsDBNull(1))
                    {
                        // the custom type mapping lets ODP.NET materialize an SdoGeometry
                        SdoGeometry geometry = reader.GetValue(1) as SdoGeometry;
                        Assert.IsNotNull(geometry);
                    }
                }
            }
        }
    }
}
```

Using SdoGeometry in your Application

Now that we have our SdoGeometry type, how do we use it? The Oracle documentation describes the SDO_GEOMETRY object type in enough detail for us to decipher it.

Recall that our SdoGeometry object has five properties: Sdo_Gtype, Sdo_Srid, Point, ElemArray and OrdinatesArray.

In the most basic scenario, when your geometry object refers to a single point, all the information you need is in the Point property. In most other scenarios, Point will be null and you’ll have to parse the values out of the OrdinatesArray.

Although the Geometry Type (sdo_gtype) property is represented as an integer, its digits encode the shape type and the structure of the ordinates array. The four digits of the sdo_gtype take the form dltt, where:

- The first digit, “d”, is the number of dimensions used to represent each point, and consequently how many values in the ordinates array describe a single point. Possible values are 2, 3 or 4.

- The second digit, “l”, identifies which dimension holds the linear referencing system (LRS) measure in a 3- or 4-dimensional LRS geometry. It is typically zero, which is used for non-LRS geometries and to accept the default (the last dimension holds the measure).

- The last two digits, “tt”, identify the shape, for example 01 for a point, 02 for a line or curve, 03 for a polygon, and 05, 06 and 07 for their multi-point, multi-line and multi-polygon counterparts.
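Because sdo_gtype is just a four-digit number, its digits can also be pulled apart with integer arithmetic rather than string slicing. The `GType` struct below is a hypothetical helper for illustration; it is not part of ODP.NET or the sample classes.

```csharp
using System;

// Hypothetical helper that splits an SDO_GTYPE value (dltt) into its parts.
public struct GType
{
    public int Dimensions;   // d: values per point in the ordinates array (2, 3 or 4)
    public int LrsDimension; // l: dimension holding the LRS measure (0 = none/default)
    public int ShapeType;    // tt: geometry type, e.g. 1 = point, 2 = line, 3 = polygon

    public static GType Parse(decimal sdoGtype)
    {
        int value = (int)sdoGtype;
        if (value < 1000 || value > 9999)
            throw new ArgumentOutOfRangeException("sdoGtype", "SDO_GTYPE must have four digits.");

        GType g;
        g.Dimensions = value / 1000;          // thousands digit
        g.LrsDimension = (value / 100) % 10;  // hundreds digit
        g.ShapeType = value % 100;            // last two digits
        return g;
    }
}
```

For example, `GType.Parse(2003)` describes a two-dimensional polygon, while `GType.Parse(3302)` describes a three-dimensional line whose third dimension holds the LRS measure.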

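Depending on the coordinate system (SRID) of your data, the ordinates may be projected coordinates such as UTM rather than latitude and longitude. Since the algorithm to convert from UTM to latitude/longitude depends on your projection and datum, I don’t want to mislead anyone with the wrong algorithm. A very detailed breakdown of the formulas is listed here, including an Excel spreadsheet that walks through the calculation. Here’s an example that converts an SdoGeometry into an application-specific shape, leaving the coordinate conversion to a ToLatLong method: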

```csharp
// Shape, LatLong, LatLongs, Constants.ShapeType, ReadPoint, GetShapeType and
// ToLatLong are application-specific types and helpers (not shown).
public Shape ToShape(SdoGeometry geometry)
{
    Shape shape = new Shape();

    if (geometry.Point != null)
    {
        LatLong point = ReadPoint(geometry.Point);
        shape.Add(point);
        shape.shapeType = Constants.ShapeType.Point;
    }
    else
    {
        // GTYPE is represented as dltt where:
        //   d  = number of dimensions to the data
        //   l  = LRS measure dimension (default is zero)
        //   tt = shape type
        int gType = (int)geometry.Sdo_Gtype.Value;
        int dimensions = gType / 1000;
        int lrsMeasureValue = (gType / 100) % 10;
        int shapeType = gType % 100;

        // convert tt value to a custom enum
        shape.shapeType = GetShapeType(shapeType);

        LatLongs points = ReadPoints(geometry.OrdinatesArray, dimensions);
        shape.Points.Add(points);
    }

    return shape;
}

private LatLongs ReadPoints(decimal[] ordinates, int dimensions)
{
    LatLongs points = new LatLongs();

    // each point occupies 'dimensions' consecutive values; Oracle stores
    // X (easting/longitude) before Y (northing/latitude)
    for (int pIndex = 0; pIndex + 1 < ordinates.Length; pIndex += dimensions)
    {
        double x = (double)ordinates[pIndex];
        double y = (double)ordinates[pIndex + 1];
        LatLong latLong = ToLatLong(x, y);
        points.Add(latLong);
    }

    return points;
}
```
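Note that `ReadPoints` treats the whole ordinates array as a single sequence of points. For geometries made of several elements, such as a polygon with a hole or a multi-line, the ElemArray property (SDO_ELEM_INFO) describes where each element starts: it is a list of triplets whose first value is the 1-based starting offset of the element within the ordinates array, followed by the element type (SDO_ETYPE) and its interpretation. Here is a minimal sketch of splitting the ordinates along those boundaries; `ElementSplitter` and `SplitOrdinates` are hypothetical names, and it assumes simple (non-compound) elements only.

```csharp
using System;
using System.Collections.Generic;

public static class ElementSplitter
{
    // Splits SDO_ORDINATES into one array per element described by SDO_ELEM_INFO.
    // elemInfo holds triplets: (1-based starting offset, etype, interpretation).
    // Assumes simple elements; compound element headers are not handled.
    public static List<decimal[]> SplitOrdinates(decimal[] elemInfo, decimal[] ordinates)
    {
        var elements = new List<decimal[]>();
        if (elemInfo == null || ordinates == null)
            return elements;

        int tripletCount = elemInfo.Length / 3;
        for (int t = 0; t < tripletCount; t++)
        {
            int start = (int)elemInfo[t * 3] - 1; // convert to a 0-based index
            int end = (t + 1 < tripletCount)
                ? (int)elemInfo[(t + 1) * 3] - 1  // next element's starting index
                : ordinates.Length;               // last element runs to the end

            decimal[] slice = new decimal[end - start];
            Array.Copy(ordinates, start, slice, 0, slice.Length);
            elements.Add(slice);
        }

        return elements;
    }
}
```

Each slice can then be passed to `ReadPoints` on its own, so an exterior ring (etype 1003) and an interior ring (etype 2003) end up as separate point lists instead of one merged outline.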

by bryan at 8:14 AM
