Having automation to perform common tasks is great. Having that automation run on a regular basis in the cloud is awesome.

Today, I'd like to expand upon the sweet Azure CLI script to manage Azure DevOps User Licenses I wrote and put it in an Azure Pipeline. The details of that automation script are outlined in my last post, so take the time to check that out if you're interested, but to recap: my Azure CLI script activates and deactivates Azure DevOps user licenses if they’re not used. This post focuses on how you can configure your pipeline to run your az devops automation on a recurring schedule.

Table of Contents

- About Pipeline Security

- Setup a PAT Token

- Secure Access to Tokens

- Create the Pipeline

- Define the Schedule

- Authenticate Azure DevOps CLI using PAT Token

- Run PowerShell Script from ADO

- Combining with the Azure CLI

About Pipeline Security

When our pipelines run, they operate by default using a project-specific user account: <Project Name> Build Service (<Organization Name>). For security purposes, this account is restricted to information within the Project.

If your pipelines need to access details beyond the Project they reside in, for example if you have a pipeline that needs access to repositories in other projects, you can configure the Pipeline to use the Project Collection Build Service (<Organization Name>). This subtle change is made by toggling off "Limit job authorization scope to current project for non-release pipelines" (Project Settings -> Pipelines -> Settings).

In both the Project and Collection level scenarios, the security context of the build account is made available to our pipelines through the $(System.AccessToken) variable. There's a small trick that's needed to make the access token available to our PowerShell scripts and I'll go over this later. For the most part, if you're only accessing information about pipelines, code changes or details about the project, the supplied Access Token should be sufficient. In scenarios where you're trying to alter elements in the project, you may need to grant some additional permissions to the build service account.
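As a rough illustration of that trick, the sketch below maps $(System.AccessToken) into an environment variable so a PowerShell Core step can read it. The REST call to list build definitions and the SYSTEM_ACCESSTOKEN variable name are illustrative choices of mine, not part of the original script:

```yaml
steps:
- pwsh: |
    # SYSTEM_ACCESSTOKEN is only populated because of the env mapping below
    if (-not $env:SYSTEM_ACCESSTOKEN) { throw "System.AccessToken was not mapped into the environment" }
    $headers = @{ Authorization = "Bearer $env:SYSTEM_ACCESSTOKEN" }
    # Predefined variables are exposed as upper-case environment variables;
    # the collection URI already ends with a trailing slash
    $url = "${env:SYSTEM_COLLECTIONURI}${env:SYSTEM_TEAMPROJECTID}/_apis/build/definitions?api-version=6.0"
    (Invoke-RestMethod -Uri $url -Headers $headers).value | Select-Object id, name
  displayName: List build definitions with the build service token
  env:
    SYSTEM_ACCESSTOKEN: $(System.AccessToken)
```

Without the env mapping, the script would see an empty value, because the token is not exposed to scripts automatically.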

However, for the purposes of today's discussion, we want to modify user account licenses, which requires the elevated permissions of a Project Collection Administrator. I need to stress this next point: do not place the Project Collection Build Service in the Project Collection Administrators group. You're effectively granting any pipeline that uses this account full access to your organization. Do not do this. Here be dragons.

Ok, so if the $(System.AccessToken) doesn't have the right level of access, we need an alternate access token that does.

Setup a PAT Token

Setting up Personal Access Tokens is a fairly common activity, so I'll refer you to this document on how the token is created. As we are managing users and user licenses, we need a PAT Token created by a Project Collection Administrator with the Member Entitlement Management scope:

Secure Access to Tokens

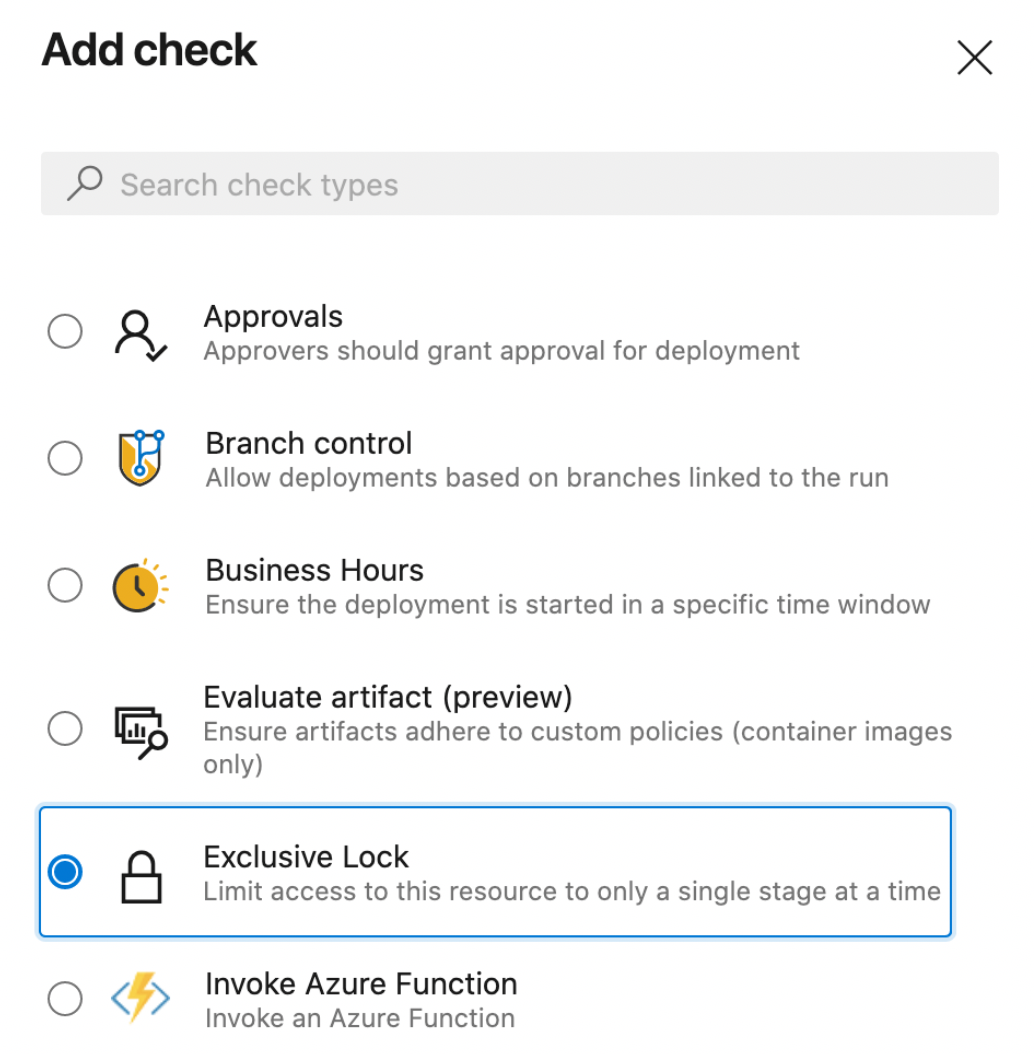

Now that we have the token that can manage user licenses, we need to put it somewhere safe. Azure DevOps offers a few good options here, each with an increasing level of security and complexity:

- Place the token as a secret in the Pipeline

- Place the token in a Variable Group

- Place the token in a Variable Group that uses an Azure Key Vault

- Use a marketplace extension to fetch the secret from an alternate secrets provider (e.g. HashiCorp Vault)

My personal go-to is Variable Groups because they can be shared across multiple pipelines. Variable Groups also have their own Access Rights, so the owner of the variable group must authorize which pipelines and users are allowed to use its secrets.

For our discussion, we'll create a variable group "AdminSecrets" with a variable "ACCESS_TOKEN".

Create the Pipeline

With our security concerns locked down, let's create a new pipeline (Pipelines -> Pipelines -> New Pipeline) with some basic scaffolding that defines both the machine type and access to our variable group that has my access token.

    name: Manage Azure Licenses
    trigger: none
    pool:
      vmImage: 'ubuntu-latest'
    variables:
    - group: AdminSecrets

I want to call out that by using a Linux machine, we're using PowerShell Core. There are some subtle differences between PowerShell and PowerShell Core, so I would recommend that you always write your scripts locally against PowerShell Core.
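If you want to confirm which edition a given agent is running, a small diagnostic step like the following can help. This is only a sketch; the step name is arbitrary:

```yaml
steps:
- pwsh: |
    # 'Core' is reported by PowerShell 6 and later; Windows PowerShell 5.1 reports 'Desktop'
    Write-Host "Edition:" $PSVersionTable.PSEdition
    Write-Host "Version:" $PSVersionTable.PSVersion
  displayName: Show PowerShell edition
```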

Define the Schedule

Next, we'll set up the schedule for the pipeline using cron syntax.

We'll configure our pipeline to run every night at midnight (cron schedules in Azure Pipelines are evaluated in UTC):

    schedules:
    # run at midnight every day
    - cron: "0 0 * * *"
      displayName: Check user licenses (daily)
      branches:
        include:
        - master
      always: true

By default, schedule triggers only run if there are changes, so we need to specify "always: true" to have this script run consistently.
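The same syntax covers other cadences. As a sketch, the variation below runs only on weekdays; the 05:00 UTC time and the display name are placeholder choices, so adjust them to your own time zone and needs:

```yaml
schedules:
# fields are: minute, hour, day of month, month, day of week (0 = Sunday)
# run at 05:00 UTC, Monday through Friday
- cron: "0 5 * * 1-5"
  displayName: Check user licenses (weekdays)
  branches:
    include:
    - master
  always: true
```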

Authenticate Azure DevOps CLI using PAT Token

In order to invoke our script that uses az devops functions, we need to set up the Azure DevOps CLI to use our PAT Token. As a security restriction, Azure DevOps does not make secrets available to scripts, so we need to explicitly pass in the value as an environment variable.

    - script: |
        az extension add -n azure-devops
      displayName: Install Azure DevOps CLI

    - script: |
        # ADO_PAT_TOKEN is the environment variable mapped in env below
        echo "$ADO_PAT_TOKEN" | az devops login
        az devops configure --defaults organization=$(System.CollectionUri)
      displayName: Login and set defaults
      env:
        ADO_PAT_TOKEN: $(ACCESS_TOKEN)
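As an alternative to piping the token into az devops login, the azure-devops extension also reads a PAT from the AZURE_DEVOPS_EXT_PAT environment variable. A minimal sketch, assuming the same AdminSecrets variable group and ACCESS_TOKEN variable; the az devops user list call is only an illustrative command:

```yaml
steps:
- script: |
    az extension add -n azure-devops
  displayName: Install Azure DevOps CLI

- script: |
    az devops configure --defaults organization=$(System.CollectionUri)
    # No explicit login: the extension picks up AZURE_DEVOPS_EXT_PAT from the environment
    az devops user list --top 5 --output table
  displayName: List users using the PAT from the environment
  env:
    AZURE_DEVOPS_EXT_PAT: $(ACCESS_TOKEN)
```

Because env mappings are scoped to a single step, every step that calls az devops this way needs its own mapping.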

Run PowerShell Script from ADO

Now that our pipeline has the ADO CLI installed and we're authenticated using our secure PAT token, our last step is to invoke the PowerShell script. Here I'm using the pwsh task to ensure that PowerShell Core is used. The "pwsh" task is a shortcut syntax for the standard PowerShell task.

Our pipeline looks like this:

    name: Manage Azure Licenses

    trigger: none

    schedules:
    # run at midnight every day
    - cron: "0 0 * * *"
      displayName: Check user licenses (daily)
      branches:
        include:
        - master
      always: true

    pool:
      vmImage: 'ubuntu-latest'

    variables:
    - group: AdminSecrets

    steps:
    - script: |
        az extension add -n azure-devops
      displayName: Install Azure DevOps CLI

    - script: |
        # ADO_PAT_TOKEN is the environment variable mapped in env below
        echo "$ADO_PAT_TOKEN" | az devops login
        az devops configure --defaults organization=$(System.CollectionUri)
      displayName: Login and set defaults
      env:
        ADO_PAT_TOKEN: $(ACCESS_TOKEN)

    - pwsh: ./manage-user-licenses.ps1
      displayName: Manage User Licenses
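For reference, the pwsh shortcut in the final step corresponds roughly to the long form of the PowerShell task with its pwsh input enabled. A sketch of the equivalent step, assuming the script sits at the root of the repository:

```yaml
steps:
- task: PowerShell@2
  displayName: Manage User Licenses
  inputs:
    targetType: 'filePath'
    filePath: './manage-user-licenses.ps1'
    # pwsh: true runs the script with PowerShell Core instead of Windows PowerShell
    pwsh: true
```

The long form is mostly useful when you need the task's other inputs, such as arguments for passing parameters to the script.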

Combining with the Azure CLI

Keen eyes may recognize that my manage-user-licenses.ps1 from my last post also used the Azure CLI to access Active Directory, and because az login and az devops login are two separate authentication mechanisms, the approach described above won’t work in that scenario. To support this, we’ll also need:

- A service-connection from Azure DevOps to Azure (a Service Principal with access to our Azure Subscription)

- Directory.Read.All role assigned to the Service Principal

- A script to authenticate us with the Azure CLI.

The built-in Azure CLI task is probably our best option for this, as it provides an easy way to work with our Service Connection. However, because this task clears the authentication before and after it runs, we have to change our approach slightly and execute our script logic within the script definition of this task. The following shows an example of how we can use both the Azure CLI and the Azure DevOps CLI in the same task:

    - task: AzureCLI@2
      inputs:
        azureSubscription: 'my-azure-service-connection'
        scriptType: 'pscore'
        scriptLocation: 'inlineScript'
        inlineScript: |
          echo $(ACCESS_TOKEN) | az devops login
          az devops configure --defaults organization=$(SYSTEM.COLLECTIONURI) project=$(SYSTEM.TEAMPROJECT)
          az pipelines list
          az ad user list

If we need to run multiple scripts or break up the pipeline into smaller tasks as I illustrated above, we’ll need a different approach where we have more control over the authenticated context. I can dig into this in another post.

Wrap Up

As I’ve outlined in this post, we can take simple PowerShell automation that leverages the Azure DevOps CLI and run it within an Azure Pipeline securely and on a schedule.

Happy coding.